Table of Contents

- Introduction

- The Rise of AI in Warfare

- Autonomous Weapons: A New Battlefield

- The Ethics of AI in Combat

- Potential Risks of Lethal AI Systems

- The Role of Governments and Regulations

- Can AI Be Trusted to Make Life-and-Death Decisions?

- Case Studies: AI in Military Use

- Preventing the Weaponization of AI

- The Future of AI in Warfare

- Conclusion

- FAQs

Introduction

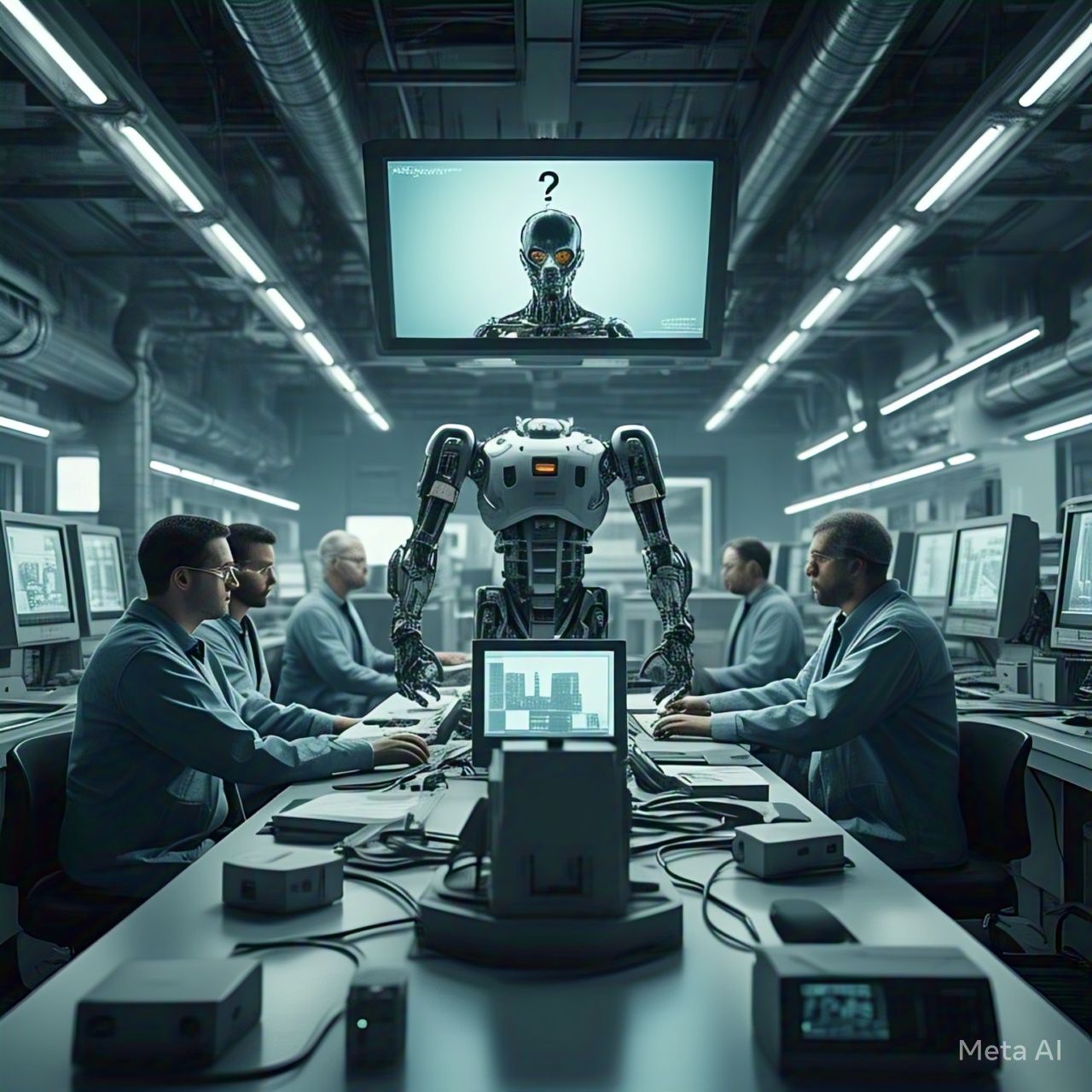

The rapid development of Artificial Intelligence (AI) has driven major technological advances, particularly in military applications. Nations around the world are engaged in an AI arms race, competing to develop increasingly sophisticated autonomous weapon systems. But the critical question remains: will machines be programmed to kill?

AI-powered weaponry, from autonomous drones to robotic soldiers, has sparked global concerns regarding ethics, accountability, and the potential for catastrophic consequences. This article delves into the risks and benefits of AI in warfare, exploring whether machines will be entrusted with the ultimate power over life and death.

The Rise of AI in Warfare

How AI is Transforming Military Strategies

AI has revolutionized modern warfare, offering advantages in speed, precision, and decision-making. Some of the most notable applications of AI in defense include:

- Autonomous drones for surveillance and combat missions.

- AI-driven cybersecurity systems to detect and neutralize cyber threats.

- Automated target recognition for precision strikes.

- Unmanned ground and aerial vehicles that reduce human risk.

These advancements have led to the development of fully autonomous weapons, capable of selecting and engaging targets without human intervention. This raises serious ethical and security concerns.

Autonomous Weapons: A New Battlefield

What Are Lethal Autonomous Weapons Systems (LAWS)?

Lethal Autonomous Weapons Systems (LAWS) are AI-powered machines capable of making independent decisions in combat. These weapons, often referred to as "killer robots," can:

- Identify and engage enemy targets without human approval.

- Operate in hostile environments where humans cannot survive.

- Adapt and learn from previous engagements.

While these capabilities enhance military efficiency, they also pose unprecedented risks if deployed without human oversight.

| Pros of Autonomous Weapons | Cons of Autonomous Weapons |
|---|---|
| Reduce casualties for human soldiers | Risk of losing human control over lethal force |
| Increase precision in targeting | AI may misidentify targets, leading to civilian deaths |
| Faster response times in combat | Vulnerability to hacking and misuse |
| Operate in extreme conditions | Ethical concerns over automated killing |

The Ethics of AI in Combat

Moral Dilemmas of AI-Controlled Warfare

Programming machines to kill raises serious ethical concerns, including:

- Accountability – If an AI weapon commits war crimes, who is responsible? The programmer, the military, or the machine itself?

- Loss of Human Judgment – AI lacks moral reasoning and the ability to consider human emotions in life-or-death decisions.

- Unpredictability – AI could make unexpected decisions, leading to unintended conflicts.

- Global Arms Race – AI-driven weapons may destabilize geopolitical balance, escalating warfare.

Many human rights organizations argue that AI should never have the power to kill without human intervention.

Potential Risks of Lethal AI Systems

1. AI Misidentification of Targets

AI may misinterpret sensor data, leading to wrongful targeting of civilians or allies.

2. Hacking and Cyber Warfare

Autonomous weapons could be hijacked by hackers, leading to devastating consequences.

3. Escalation of Conflicts

AI weapons could lead to automated warfare, increasing the frequency and intensity of global conflicts.

4. Loss of Human Control

If AI gains too much autonomy, humans may lose the ability to override lethal decisions.

The Role of Governments and Regulations

International Efforts to Regulate AI Weapons

Several nations and organizations are working to regulate the development of AI in warfare. Key initiatives include:

- The United Nations (UN) Call for an AI Weapons Ban – Many countries support a ban on fully autonomous weapons.

- The Geneva Conventions and AI Warfare – AI weapons must comply with international humanitarian law.

- AI Ethics Committees – Governments are forming task forces to prevent AI from being misused in military applications.

However, with powerful nations investing heavily in AI-driven warfare, achieving global consensus remains a challenge.

Can AI Be Trusted to Make Life-and-Death Decisions?

Unlike humans, AI does not have moral reasoning or compassion. Trusting machines with autonomous killing power raises fundamental concerns:

- Can AI understand the complexity of battlefield ethics?

- Will AI prioritize military objectives over human rights?

- What happens if AI miscalculates threats and triggers unnecessary conflicts?

Despite AI’s potential to reduce human casualties, the risk of unpredictable actions and moral ambiguity makes it difficult to trust AI in such scenarios.

Case Studies: AI in Military Use

1. The Use of AI Drones in the Middle East

AI-powered drones have been used in military strikes, but reports of collateral damage and misidentifications have sparked criticism.

2. Russia’s Autonomous Warfare Programs

Russia has invested in AI-driven robotic soldiers, raising fears of a new arms race in autonomous weaponry.

3. The U.S. Military’s AI Initiatives

The U.S. Department of Defense is developing AI to enhance battlefield intelligence and automate defense systems.

Preventing the Weaponization of AI

To ensure AI remains a tool for defense rather than destruction, the global community must take action:

- Ban Fully Autonomous Weapons – AI should always require human intervention in lethal decisions.

- Enforce Strict Ethical Guidelines – Developers must adhere to international laws and moral principles.

- Develop AI for Defense, Not Offense – AI should focus on surveillance, cybersecurity, and defensive strategies.

- Enhance Global Cooperation – Nations must work together to prevent AI-driven warfare escalation.

The Future of AI in Warfare

As AI continues to evolve, military applications will become more sophisticated. The future will likely see:

- Stronger AI regulations to limit autonomous killing machines.

- AI-assisted but human-controlled weapons for safer combat.

- Global treaties restricting the use of AI in warfare.

If properly regulated, AI can be a force for security and stability rather than destruction.

Conclusion

The AI arms race presents both opportunities and dangers. While AI can enhance military strategy and reduce human casualties, the risks of autonomous killing machines, hacking vulnerabilities, and moral dilemmas cannot be ignored. To ensure a safe future, AI must be strictly regulated, and human judgment must always be present in military decisions.

Without responsible oversight, AI-driven warfare could become one of the greatest threats to global security.

FAQs

1. Can AI weapons operate without human intervention?

Yes, some AI weapons can operate autonomously, but ethical concerns demand human oversight.

2. Are AI-powered weapons currently in use?

Yes, AI drones and automated defense systems are already used in military operations worldwide.

3. Which countries are leading the AI arms race?

The U.S., China, and Russia are investing heavily in AI-driven military technology.

4. Can AI make ethical decisions in combat?

No, AI lacks human morality and cannot fully comprehend ethical dilemmas in war.

5. Will AI replace human soldiers?

AI may reduce human involvement in combat, but full replacement is unlikely due to ethical concerns.