Table of Contents

- Introduction

- The Role of AI in Modern Healthcare

- AI-Assisted Diagnosis and Treatment

- Robotic Surgery: Enhancing Precision or Introducing Risk?

- Ethical Considerations in AI-Driven Healthcare

- Can AI Handle Emergency Medical Decisions?

- The Potential Risks of AI in Medicine

- Regulatory Measures and Safety Protocols

- Case Studies: Successes and Failures of AI in Healthcare

- The Future of AI in Life-and-Death Decisions

- Conclusion

- FAQs

Introduction

Artificial Intelligence (AI) is transforming healthcare, promising faster diagnoses, personalized treatments, and even robotic-assisted surgeries. However, the question remains: Can AI be trusted with life-or-death decisions?

While AI-powered healthcare systems offer unmatched precision and efficiency, they also raise concerns regarding ethics, liability, and reliability. This article explores the role of robots in healthcare, the potential benefits and risks of AI-driven decisions, and whether we can truly trust machines with human lives.

The Role of AI in Modern Healthcare

How AI is Changing Medical Practices

AI is revolutionizing healthcare by providing data-driven insights, automating tasks, and improving medical outcomes. Key applications include:

- Medical Imaging Analysis – AI detects anomalies in X-rays, MRIs, and CT scans, in some studies matching or exceeding the accuracy of human radiologists.

- AI-Powered Chatbots – Virtual assistants help assess symptoms and suggest possible next steps for care.

- Automated Drug Discovery – AI accelerates research by identifying potential drugs faster than traditional methods.

- Remote Patient Monitoring – AI tracks vitals and detects early signs of complications.

The integration of AI into medicine is reducing errors and increasing efficiency, but should AI ever have the final say in critical medical decisions?

AI-Assisted Diagnosis and Treatment

How Accurate is AI in Diagnosing Diseases?

AI algorithms can analyze large volumes of medical records far faster than a person could, spotting patterns that human doctors may miss. Examples include:

- IBM Watson Health – Uses AI to recommend cancer treatments by analyzing medical literature.

- Google DeepMind’s AlphaFold – Predicts protein structures, aiding in disease understanding.

- AI in Diabetes Management – AI-powered apps help predict and manage diabetes risk factors.

However, AI is not infallible. Errors in data input, biases in algorithms, and unforeseen variables can lead to incorrect diagnoses, potentially putting lives at risk.
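To see why a high headline accuracy does not by itself make a diagnosis trustworthy, it helps to ask how often a positive result is actually correct. The sketch below applies Bayes' rule to that question. The sensitivity, specificity, and prevalence figures are illustrative assumptions, not measurements from any real system.

```python
def positive_predictive_value(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Probability that a patient flagged positive actually has the disease."""
    true_positives = sensitivity * prevalence
    false_positives = (1.0 - specificity) * (1.0 - prevalence)
    return true_positives / (true_positives + false_positives)


# Hypothetical screening model: 95% sensitive, 95% specific, 1% of patients affected.
ppv = positive_predictive_value(sensitivity=0.95, specificity=0.95, prevalence=0.01)
print(f"Chance a positive result is correct: {ppv:.1%}")
```

Under these assumed numbers, only about 16% of positive results are true cases, because healthy patients vastly outnumber sick ones. A tool that looks accurate on paper can still generate mostly false alarms for a rare condition.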

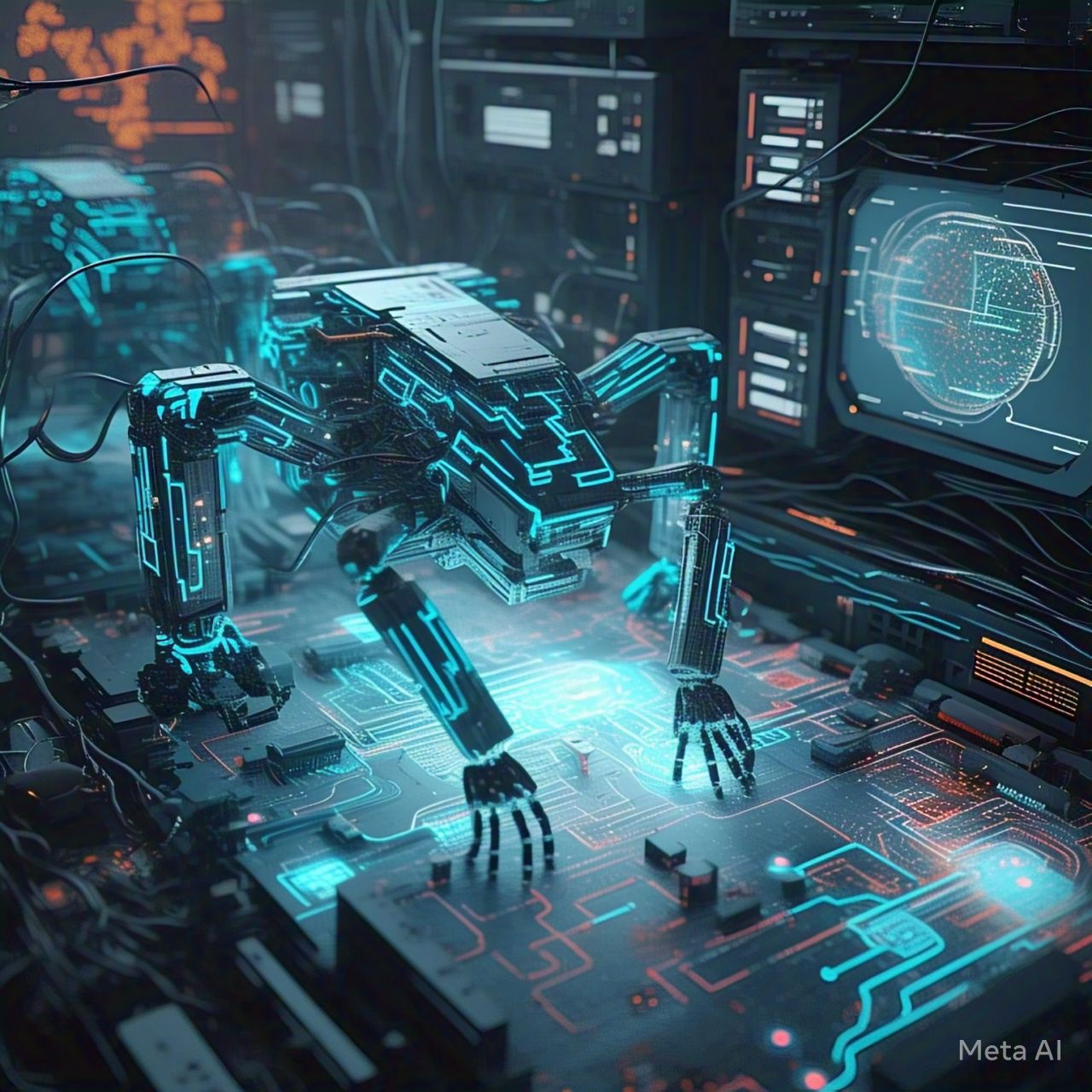

Robotic Surgery: Enhancing Precision or Introducing Risk?

Are AI Surgeons the Future?

Robotic surgery systems like the da Vinci Surgical System assist human surgeons with:

- Higher precision and reduced human tremors.

- Minimally invasive procedures for faster recovery.

- Enhanced visualization of surgical sites.

However, AI-assisted surgery involves trade-offs:

| Pros of AI in Surgery | Cons of AI in Surgery |
|---|---|
| Increased accuracy | Lack of human intuition in unpredictable cases |
| Reduced complications | High initial and maintenance costs |
| Faster healing times | Potential for software malfunctions |
| Smaller incisions | Ethical concerns over liability in malpractice cases |

While AI enhances surgical precision, the absence of human judgment in critical moments is a major concern.

Ethical Considerations in AI-Driven Healthcare

Who is Responsible When AI Makes a Mistake?

AI is only as good as the data it learns from. If flawed data leads to misdiagnoses or incorrect treatments, who is liable?

- Doctors – if they rely too heavily on AI recommendations?

- Developers – if they build an algorithm with biases or errors?

- Hospitals – if they deploy AI without proper oversight?

Without clear accountability, AI-driven healthcare raises serious ethical concerns.

Can AI Handle Emergency Medical Decisions?

AI in Critical Care: Friend or Foe?

In emergency rooms (ERs) and intensive care units (ICUs), AI is used to:

- Predict sepsis risk through early warning systems.

- Monitor patient deterioration using AI-powered wearables.

- Recommend urgent interventions based on real-time data.

However, trusting AI with split-second life-saving decisions remains debatable, as machines lack human intuition and compassion in high-stakes situations.
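One way to picture an early warning system that informs rather than decides is a score that only ever escalates to a person. The sketch below is a simple rule-based stand-in for the learned models real systems use. The `Vitals` fields, the cut-off values, and the `alert_threshold` parameter are all hypothetical and are not clinical criteria.

```python
from dataclasses import dataclass


@dataclass
class Vitals:
    heart_rate: int          # beats per minute
    respiratory_rate: int    # breaths per minute
    temperature_c: float     # degrees Celsius
    systolic_bp: int         # mmHg


def warning_score(v: Vitals) -> int:
    """Count how many vitals fall outside illustrative (non-clinical) limits."""
    score = 0
    if v.heart_rate > 110:
        score += 1
    if v.respiratory_rate > 22:
        score += 1
    if v.temperature_c > 38.5 or v.temperature_c < 36.0:
        score += 1
    if v.systolic_bp < 100:
        score += 1
    return score


def triage(v: Vitals, alert_threshold: int = 2) -> str:
    """Escalate to a clinician; the software never intervenes on its own."""
    if warning_score(v) >= alert_threshold:
        return "alert clinician"
    return "continue monitoring"


unstable = Vitals(heart_rate=118, respiratory_rate=24, temperature_c=38.9, systolic_bp=95)
stable = Vitals(heart_rate=72, respiratory_rate=14, temperature_c=36.8, systolic_bp=120)
print(triage(unstable))
print(triage(stable))
```

The design choice worth noting is that the only possible outputs are "alert clinician" and "continue monitoring". The decision about what to do for the patient stays with a human.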

The Potential Risks of AI in Medicine

1. Bias in AI Algorithms

AI models trained on biased medical data may deliver skewed diagnoses, particularly for underrepresented groups.

2. Over-Reliance on Automation

Doctors may become overly dependent on AI, reducing their critical thinking skills.

3. Data Privacy Concerns

AI requires access to sensitive patient data, raising cybersecurity risks.

4. AI Malfunctions and Technical Failures

Glitches in AI-driven machines could lead to misdiagnoses, incorrect prescriptions, or surgical errors.
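Of the risks above, algorithmic bias is one that can be checked directly: compare how often the model catches real cases in each patient group. The following is a minimal sketch of that check. The group names and records are made-up evaluation data, and `sensitivity_by_group` is a hypothetical helper rather than part of any library.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """records: iterable of (group, has_disease, model_flagged) tuples.

    Returns, per group, the share of truly sick patients the model flagged.
    """
    caught = defaultdict(int)
    sick = defaultdict(int)
    for group, has_disease, model_flagged in records:
        if has_disease:
            sick[group] += 1
            if model_flagged:
                caught[group] += 1
    return {group: caught[group] / sick[group] for group in sick}


# Made-up evaluation data for two hypothetical patient groups.
records = [
    ("group_a", True, True), ("group_a", True, True),
    ("group_a", True, True), ("group_a", True, False),
    ("group_b", True, True), ("group_b", True, False),
    ("group_b", True, False), ("group_b", True, False),
    ("group_a", False, False), ("group_b", False, True),
]
rates = sensitivity_by_group(records)
print(rates)
```

In this invented data the model catches three of four cases in one group but only one of four in the other, the kind of gap that an overall accuracy figure would hide.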

Regulatory Measures and Safety Protocols

How Can AI in Healthcare Be Made Safer?

Governments and medical institutions are implementing AI regulations, including:

- FDA Approval for AI in Medicine – Ensuring AI medical tools meet safety standards.

- Ethical AI Frameworks – Organizations like the WHO and IEEE publish guidelines for AI in healthcare.

- AI Transparency Laws – Requiring AI decisions to be explainable and auditable.

Strict regulations and oversight are essential to ensure AI serves humanity responsibly.
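To make "explainable and auditable" concrete, a system could write one reviewable log entry per decision. The sketch below shows one possible shape for such an entry. The field names, the `audit_record` helper, and the sample values are assumptions for illustration and are not drawn from any regulation or standard.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(model_version: str, inputs: dict, output: str, top_factors: list) -> dict:
    """Build one reviewable log entry for a single model decision."""
    # Hash the inputs so the entry can be matched to its source data
    # without copying sensitive patient fields into the log.
    canonical = json.dumps(inputs, sort_keys=True).encode("utf-8")
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "input_sha256": hashlib.sha256(canonical).hexdigest(),
        "output": output,
        "top_factors": top_factors,  # human-readable reasons supplied by the caller
    }


entry = audit_record(
    model_version="demo-0.1",
    inputs={"age": 54, "lactate_mmol_l": 2.4},
    output="flag for review",
    top_factors=["elevated lactate"],
)
print(json.dumps(entry, indent=2))
```

Recording the model version alongside each output lets a reviewer later determine which version of the software produced a disputed recommendation.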

Case Studies: Successes and Failures of AI in Healthcare

1. AI Detecting Cancer Early

In a study published in 2020, Google’s AI-powered mammogram analysis produced fewer false positives and false negatives than the human radiologists it was compared against.

2. AI Misdiagnosing Patients

In 2018, press reports based on internal IBM documents indicated that Watson for Oncology had recommended unsafe or incorrect cancer treatments, highlighting the risks of AI errors.

3. AI in COVID-19 Detection

AI-based surveillance tools flagged early signs of the COVID-19 outbreak, aiding global response efforts.

The Future of AI in Life-and-Death Decisions

What’s Next for AI in Medicine?

The future of AI in healthcare will likely see:

- Greater collaboration between AI and human doctors.

- Improved AI transparency to prevent medical errors.

- Tighter regulations to ensure safety and ethics.

- Advanced AI models that can detect and treat diseases faster than ever.

Despite its potential, AI should always function as a tool to assist, not replace, human doctors.

Conclusion

AI is revolutionizing healthcare, offering enhanced precision, efficiency, and accessibility. However, entrusting AI with life-or-death decisions remains controversial. While AI can assist in diagnosing diseases, performing surgeries, and monitoring patients, it lacks human intuition, ethics, and accountability.

The key to integrating AI in healthcare safely lies in regulation, oversight, and continued human involvement. AI should serve as an aid, not an autonomous decision-maker in critical medical scenarios.

Until AI can fully replicate human judgment, emotions, and ethical reasoning, we must tread cautiously in allowing machines to hold life-or-death power.

FAQs

1. Can AI replace human doctors?

No, AI can assist but not replace human doctors due to its lack of moral reasoning and compassion.

2. Has AI made medical errors?

Yes, AI has misdiagnosed patients and recommended incorrect treatments in the past.

3. Are AI-powered surgeries safe?

AI-assisted surgeries have high success rates, but human oversight is necessary to prevent errors.

4. Can AI predict diseases before symptoms appear?

Yes, AI models can detect patterns indicating early disease development.

5. How is AI regulated in healthcare?

Regulators such as the FDA review AI medical tools against safety standards before deployment, while organizations like the WHO publish ethical guidelines for their use.